Introduction

Bandwidth selection has become a cornerstone for optimizing data center performance and efficiency. As data centers serve as the backbone of modern digital services, understanding the key factors influencing bandwidth choices is essential for IT professionals, network engineers, and data center managers. Decisions regarding bandwidth affect operational capabilities and have significant implications for overall cost management and service reliability.

Several elements converge to shape the decision-making process surrounding bandwidth in a data center environment. From assessing current and anticipated data traffic demands to considering connectivity options that ensure both redundancy and security, each factor plays a pivotal role in achieving optimal system performance. Moreover, evaluating the continued impact of emerging technologies—such as cloud computing and IoT—on bandwidth requirements adds another layer of complexity to this critical selection process. As we delve deeper into these influencing factors, it becomes clear that an informed approach can substantially elevate both efficiency and resilience in today’s increasingly interconnected world.

Understanding Bandwidth Requirements

Assessing current and future data traffic needs is essential for effective bandwidth selection in data centers. As organizations grow, the volume of data they generate and transmit typically increases, which calls for a scalable and flexible approach to bandwidth management. For instance, a financial services firm that recently adopted real-time analytics may see higher data throughput because its applications continuously move large volumes of data for processing. To avoid network congestion or latency during critical operations, it is crucial to estimate not only present requirements but also future growth and spikes driven by business expansion or new technology adoption.
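
A minimal sketch of that estimate follows. It assumes traffic grows at a constant compound annual rate and that a fixed headroom is reserved for spikes. The helper names, growth rate, headroom, and figures are hypothetical planning inputs, not recommendations.

```python
import math


def project_bandwidth(current_gbps: float, annual_growth: float,
                      years: int, headroom: float = 0.3) -> float:
    """Capacity needed after `years` of compound growth, plus spike headroom.

    annual_growth and headroom are assumed planning fractions (0.25 = 25%).
    """
    projected = current_gbps * (1 + annual_growth) ** years
    return projected * (1 + headroom)


def years_until_saturation(current_gbps: float, capacity_gbps: float,
                           annual_growth: float) -> float:
    """Years until compound growth consumes the installed capacity."""
    if annual_growth <= 0:
        raise ValueError("annual_growth must be positive")
    if current_gbps >= capacity_gbps:
        return 0.0
    return math.log(capacity_gbps / current_gbps) / math.log(1 + annual_growth)


# Hypothetical example: 10 Gbps today, 25% growth per year, 3-year horizon.
print(round(project_bandwidth(10, 0.25, 3), 1))        # 25.4
print(round(years_until_saturation(10, 40, 0.25), 1))  # 6.2
```

The headroom term is where the spikes mentioned above are accounted for; a site with very bursty traffic would size it from measured peaks rather than a flat percentage.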

Evaluating different applications and their bandwidth consumption patterns offers valuable insight into specific bandwidth needs. Applications such as video streaming services, cloud-based tools, and customer relationship management systems consume very different amounts of bandwidth, and their consumption changes with conditions. For example, a streaming platform hosting live events demands high bandwidth during broadcasts to maintain video quality but consumes far less during idle periods. Analyzing these patterns ensures that capacity is allocated according to actual operational demand rather than theoretical projections alone.
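
One way to apply this analysis is to sum per-application demand profiles slot by slot and size for the combined peak. The sketch below assumes each application's demand has already been measured in aligned time slots. The application names and Mbps values are made up for illustration.

```python
def combined_profile(profiles: dict[str, list[float]]) -> list[float]:
    """Sum per-application demand slot by slot (all profiles must align)."""
    lengths = {len(p) for p in profiles.values()}
    if len(lengths) != 1:
        raise ValueError("profiles must be non-empty and cover the same slots")
    slots = lengths.pop()
    return [sum(p[i] for p in profiles.values()) for i in range(slots)]


# Hypothetical Mbps per 4-hour slot over one day.
profiles = {
    "live_streaming": [50, 50, 50, 900, 900, 50],
    "crm": [300, 400, 400, 100, 50, 50],
    "nightly_backup": [20, 20, 20, 20, 20, 600],
}
combined = combined_profile(profiles)
print(max(combined))                           # 1020: actual combined peak
print(sum(max(p) for p in profiles.values()))  # 1900: naive sum of peaks
```

Because these applications peak at different times, sizing to the combined profile (1,020 Mbps here) rather than the sum of individual peaks (1,900 Mbps) avoids provisioning for a coincidence the data does not show.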

Identifying peak usage times further helps determine the capacity a data center needs. Traffic often fluctuates with user behavior or with external factors such as seasonal promotions or global events. By using monitoring tools to analyze traffic data over time, organizations can pinpoint when demand surges occur. This insight enables more precise bandwidth provisioning; for example, an e-commerce site that experiences heavy load during holiday sales may need a temporary increase in available bandwidth to maintain performance without service interruptions. Addressing these dynamic requirements improves both performance and cost efficiency in managing data center resources.
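
The sketch below shows what this analysis could look like. It assumes the monitoring tool can export throughput samples tagged with the hour of day they were taken. It uses the nearest-rank 95th percentile, a common way to size for sustained peaks while ignoring brief outliers. The helper names and sample data are illustrative.

```python
import math
from collections import defaultdict


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of monitoring samples (e.g. pct=95)."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def busiest_hour(samples: list[tuple[int, float]]) -> int:
    """Hour of day with the highest average throughput.

    samples: (hour_of_day, mbps) pairs exported from a monitoring tool.
    """
    totals: dict[int, float] = defaultdict(float)
    counts: dict[int, int] = defaultdict(int)
    for hour, mbps in samples:
        totals[hour] += mbps
        counts[hour] += 1
    return max(totals, key=lambda h: totals[h] / counts[h])


# Hypothetical samples: (hour, Mbps).
readings = [(9, 200), (9, 220), (14, 500), (14, 480), (22, 100)]
print(busiest_hour(readings))                 # 14
print(percentile([m for _, m in readings], 95))
```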

Types of Connectivity Options

The most common connection types in data center environments are copper (twisted-pair) Ethernet, fiber optics, and, occasionally, wireless technologies. Ethernet itself also runs over fiber, so the practical comparison is between cabling media. Each option has characteristics that significantly influence bandwidth selection and the overall network infrastructure. Copper Ethernet is a reliable and cost-effective solution for short runs within a single data center, such as server-to-switch connections, but twisted-pair links are limited to about 100 meters, and the highest copper speeds shorten that reach further. Fiber optics delivers high-speed transmission across long distances with minimal signal loss, making it the usual choice for core networking. Wireless technologies offer flexibility and convenience but tend to be less reliable and generally cannot sustain the heavy loads of large-scale data operations.

When these options are compared in a data center environment, fiber optics usually stands out. It carries large volumes of data at very high speeds, with single links commonly running at 100 Gbps or more, and it is immune to electromagnetic interference. Links between sites can multiply that capacity further with dense wavelength division multiplexing (DWDM), which carries multiple signals on different wavelengths over a single optical fiber. Copper cabling has advanced to variants such as Cat6a and Cat7, which support 10 Gbps over runs of up to 100 meters, but it cannot match fiber for the speeds and distances that demanding applications require.

Each type of connectivity must be evaluated against future growth projections. Fiber optics offers greater scalability because installed cabling can often carry more bandwidth as transceiver technology advances, without significant physical changes. For example, upgrading network capacity might only entail replacing transceivers rather than laying new cabling. Conversely, while copper Ethernet is widely adopted today for its cost-effectiveness, its speed and distance limits might constrain an organization’s ability to adapt if it needs rapid increases in network speed or bandwidth.
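
The scalability argument comes down to a simple multiplication. The capacity of a fiber route is the number of fiber pairs, times the wavelengths per pair, times the rate per wavelength. Faster transceivers or added DWDM wavelengths therefore raise capacity without new cable. The helper name, channel count, and per-wavelength rate below are illustrative assumptions; real figures depend on the DWDM system and optics.

```python
def fiber_capacity_gbps(fiber_pairs: int, wavelengths: int,
                        gbps_per_wavelength: float) -> float:
    """Per-direction capacity of a fiber route.

    Each duplex link uses one fiber pair; with DWDM each pair carries
    several wavelengths, and each wavelength runs at the transceiver's rate.
    """
    return fiber_pairs * wavelengths * gbps_per_wavelength


# Hypothetical route: the same two installed fiber pairs in both cases.
before = fiber_capacity_gbps(2, wavelengths=1, gbps_per_wavelength=10)
after = fiber_capacity_gbps(2, wavelengths=40, gbps_per_wavelength=100)
print(before, after)  # 20 8000
```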

Ultimately, choosing between copper Ethernet, fiber optics, and wireless connections requires weighing current needs against long-term strategy. Organizations must balance installation costs, maintenance, potential bottlenecks under peak usage, and adaptability to trends such as growing cloud adoption or surges in IoT devices that demand more capacity. Selecting an appropriate connectivity type lays the groundwork for future-proofing a data center’s infrastructure while ensuring optimal performance today.

Redundancy Considerations

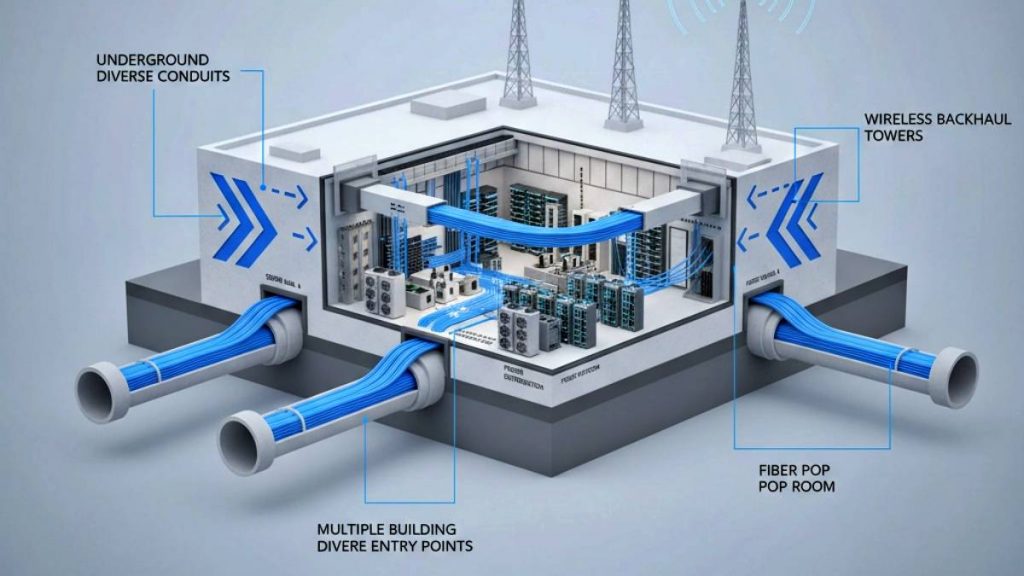

Redundancy is fundamental to network reliability, particularly in data centers where continuous uptime is paramount. With the increasing reliance on digital services, even brief downtime can have significant financial repercussions and damage an organization’s reputation. Redundancy ensures that if one component fails, another can take over without disrupting service. This requires not only redundant hardware and connections but also configurations that allow quick recovery from failures.

To maintain high availability, data center managers often employ various failover strategies. One common approach is an active-passive configuration, in which a backup system remains idle until the primary system fails and then takes over. For example, a second internet connection from a different ISP can stand by to carry traffic if the primary link goes down. Another strategy is an active-active configuration, in which all systems operate simultaneously and share the load; if one fails, the others continue to provide service without noticeable interruption, provided they have enough spare capacity to absorb its share. Component-level measures such as dual power supplies in critical servers complement both approaches by removing single points of failure. Active-passive setups mainly reduce risk, while active-active setups can also improve performance because all capacity carries traffic during normal operation.
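
The benefit of adding redundant paths can be estimated with the standard parallel-availability formula, 1 - (1 - A)^n. The sketch below assumes the redundant components fail independently, which shared conduits or a common upstream provider can violate. It also ignores the switchover time of an active-passive pair. The helper names and the 99% link availability are hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def parallel_availability(component_availability: float, copies: int) -> float:
    """Availability of `copies` redundant components, assuming independent failures."""
    return 1 - (1 - component_availability) ** copies


def downtime_minutes_per_year(availability: float) -> float:
    """Expected minutes per year during which no component is available."""
    return (1 - availability) * MINUTES_PER_YEAR


single = 0.99  # hypothetical availability of one ISP link
for n in (1, 2):
    a = parallel_availability(single, n)
    print(n, round(a, 6), round(downtime_minutes_per_year(a), 1))
```

Under these assumptions, a second independent link cuts expected downtime from roughly 5,256 minutes to about 53 minutes per year.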

Geographic redundancy also plays a vital role in network resilience. By distributing resources across different locations, organizations can safeguard against regional disruptions, whether natural disasters or human-caused incidents, that could affect local operations. For instance, financial institutions often operate data centers in separate geographic zones to meet regulatory requirements and to keep transaction data available during localized outages. Such distribution requires careful planning of bandwidth between sites to support real-time data replication and communications.
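
A rough way to size the inter-site link is to divide the volume of changed data by the time available to replicate it. The sketch below uses decimal units and an assumed link-efficiency factor for protocol overhead and contention. The helper name and all figures are hypothetical.

```python
def replication_mbps(changed_gb: float, window_seconds: float,
                     link_efficiency: float = 0.7) -> float:
    """Inter-site bandwidth needed to ship `changed_gb` within a time window.

    Uses decimal units (1 GB = 8,000 Mb). link_efficiency is an assumed
    fraction of raw capacity left after protocol overhead and contention.
    """
    megabits = changed_gb * 8_000
    return megabits / window_seconds / link_efficiency


# Hypothetical: 500 GB of changes per day, spread evenly over 24 hours.
print(round(replication_mbps(500, 24 * 3600), 1))  # 66.1
# Same site, but 400 GB of those changes land in a 4-hour batch window.
print(round(replication_mbps(400, 4 * 3600), 1))   # 317.5
```

The second case shows why the peak rate of change, not the daily average, usually drives the size of a replication link.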

Incorporating redundancy into bandwidth selection mitigates risk and gives stakeholders confidence that business operations will continue under a range of failure conditions. As technology evolves, these strategies must adapt to emerging threats and growing demands. By treating redundancy as part of overall bandwidth planning, IT professionals position their organizations for sustained operational success.

Cost Analysis of Bandwidth Selection

Comprehensive cost analysis is essential when selecting bandwidth for a data center. Different connectivity options come with varying price tags, influenced by factors such as technology type, service provider agreements, and installed infrastructure. For instance, while traditional copper Ethernet might offer lower initial costs, fiber optics typically provides superior performance and scalability that can justify higher upfront investments. To make informed choices, managers must break down costs associated with both initial setup and ongoing operational expenses.
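
Breaking costs into upfront and recurring parts makes options comparable over a planning horizon. The sketch below computes total cost and cost per Gbps. The option names, capacities, and prices are invented for illustration and do not reflect real market rates.

```python
from dataclasses import dataclass


@dataclass
class LinkOption:
    name: str
    capacity_gbps: float
    upfront: float   # installation, optics, cabling
    monthly: float   # circuits, power, support contracts

    def total_cost(self, years: int) -> float:
        return self.upfront + self.monthly * 12 * years

    def cost_per_gbps(self, years: int) -> float:
        return self.total_cost(years) / self.capacity_gbps


# Hypothetical prices for illustration only.
options = [
    LinkOption("copper_10g", capacity_gbps=10, upfront=20_000, monthly=1_500),
    LinkOption("fiber_100g", capacity_gbps=100, upfront=60_000, monthly=1_200),
]
for opt in options:
    print(opt.name, opt.total_cost(5), round(opt.cost_per_gbps(5)))
```

In this made-up case the fiber option costs more in total but far less per Gbps. Whether that matters depends on whether the extra capacity will actually be used within the horizon.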

Balancing performance needs against budgetary constraints requires an understanding of the organization’s goals. For example, an e-commerce company experiencing rapid growth may opt to invest in higher bandwidth solutions to support traffic surges during peak seasons like Black Friday. Conversely, a small startup might prioritize cost-efficiency over high-speed access initially but could face crippling slowdowns if traffic exceeds expectations. Thus, data center operators must look beyond immediate expense and consider the ramifications of inadequate bandwidth on performance and user experiences.

The long-term financial implications of under- or over-provisioning bandwidth are substantial. Insufficient capacity creates bottlenecks that slow applications and can harm customer satisfaction, and it may force costly emergency upgrades or migration to more robust systems sooner than planned. Over-provisioning guards against sudden traffic spikes, but capacity that is paid for and rarely used is wasted spending. Techniques such as predictive modeling and usage monitoring help IT decision-makers forecast bandwidth needs accurately and avoid both kinds of cost.
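
One way to make this trade-off concrete is to price both sides. For each candidate capacity, add the cost of provisioning it to the expected penalty for demand it cannot carry, then pick the cheapest candidate. This is a simple scenario-based expected-cost model, offered as an illustration rather than a method prescribed here. The scenarios, probabilities, and prices are hypothetical.

```python
def expected_cost(capacity_gbps: float,
                  scenarios: list[tuple[float, float]],
                  price_per_gbps: float,
                  shortfall_cost_per_gbps: float) -> float:
    """Provisioning cost plus expected penalty for unmet demand.

    scenarios: (demand_gbps, probability) pairs whose probabilities sum to 1.
    shortfall_cost_per_gbps stands in for emergency upgrades or lost revenue.
    """
    provisioning = capacity_gbps * price_per_gbps
    shortfall = sum(prob * max(demand - capacity_gbps, 0) * shortfall_cost_per_gbps
                    for demand, prob in scenarios)
    return provisioning + shortfall


# Hypothetical demand forecast and prices.
scenarios = [(40, 0.5), (60, 0.3), (100, 0.2)]
candidates = [40, 60, 80, 100]
costs = {c: expected_cost(c, scenarios, 100, 400) for c in candidates}
best = min(costs, key=costs.get)
print(best)  # 60
```

Here, provisioning for the worst case (100 Gbps) and for the most likely case (40 Gbps) both cost more in expectation than the middle option.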

Overall, a thorough cost analysis of bandwidth selection identifies the best-fit solution for current circumstances and puts organizations on stable footing for future adjustments. By weighing initial costs against projected performance and planning for sustainable capacity management, decision-makers can build resilient data centers that are prepared for evolving demands while protecting their budgets.

Impact of Network Architecture

The architecture of a network plays a foundational role in determining bandwidth requirements within data centers. An organization’s network layout dictates how data flows between servers, storage, and clients, and therefore shapes overall performance. For instance, a flat network topology may enable faster data access across nodes, but it can become congested if its links lack sufficient capacity. Conversely, a hierarchical structure can improve manageability and scalability by dividing traffic into segments; however, each additional tier adds hops and latency, and under-provisioned links between tiers become congestion points.
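
A quick check for under-provisioned links between tiers is each switch’s oversubscription ratio: server-facing capacity divided by uplink capacity. The minimal sketch below uses a hypothetical helper. The port counts describe an illustrative leaf switch, and acceptable ratios depend on the workload.

```python
def oversubscription_ratio(down_ports: int, down_gbps: float,
                           up_ports: int, up_gbps: float) -> float:
    """Server-facing capacity divided by uplink capacity for one switch.

    1.0 means non-blocking; 3.0 means that if every server sends at line
    rate toward the uplinks, together they get at most a third of it.
    """
    return (down_ports * down_gbps) / (up_ports * up_gbps)


# Hypothetical leaf switch: 48 x 25G server ports, 6 x 100G uplinks.
print(oversubscription_ratio(48, 25, 6, 100))  # 2.0
# Dropping to 4 uplinks increases contention between tiers.
print(oversubscription_ratio(48, 25, 4, 100))  # 3.0
```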

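Routing protocols further influence bandwidth efficiency by determining the paths data takes through the network. Within an organization’s network, Open Shortest Path First (OSPF) computes shortest paths from link costs, which are typically derived from interface bandwidth, and it recalculates routes automatically when links fail or change. Border Gateway Protocol (BGP) exchanges routes between networks, such as between sites and service providers, and lets operators steer traffic onto preferred links through policy attributes. Good path selection matters most at peak times, when heavy traffic makes bottlenecks more likely. Prioritizing key applications over less critical ones, however, is usually handled by quality-of-service (QoS) policies such as traffic classification and priority queuing rather than by the routing protocol itself. QoS helps ensure that essential services receive the necessary bandwidth even under heavy loads.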
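
OSPF’s bandwidth-derived cost is easy to reproduce. The sketch below follows the common vendor convention of cost = reference bandwidth / interface bandwidth, truncated to an integer, floored at 1, and capped at the 16-bit maximum. It defaults to the 100 Mbps reference used by Cisco IOS. The function name is illustrative, and other vendors may use different defaults.

```python
def ospf_cost(interface_mbps: int, reference_mbps: int = 100) -> int:
    """Interface cost as reference bandwidth / interface bandwidth.

    Truncated to an integer, floored at 1, and capped at 65535 (the OSPF
    interface cost field is 16 bits).
    """
    return min(65_535, max(1, reference_mbps // interface_mbps))


for speed in (100, 1_000, 10_000, 100_000):
    print(speed, ospf_cost(speed), ospf_cost(speed, reference_mbps=100_000))
```

With the 100 Mbps default, every interface of 100 Mbps or faster gets cost 1, so OSPF cannot prefer a 100 Gbps path over a 1 Gbps one. Raising the reference bandwidth consistently on all routers (for example with `auto-cost reference-bandwidth` on Cisco IOS) restores the distinction.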

As organizations increasingly adopt virtualized infrastructures alongside traditional physical setups, considerations surrounding bandwidth planning become more complex. Virtual environments often have fluctuating workloads that require flexible and scalable bandwidth solutions. Data center managers need to account for these variances when selecting bandwidth options; strategies like implementing software-defined networking (SDN) can offer greater agility in allocating resources as demand changes. Additionally, an integrated approach that considers both virtual and physical components could promote better utilization of available bandwidth while reducing costs associated with over-provisioning or frequent hardware upgrades.

In short, an organization’s network architecture serves as a blueprint for its bandwidth needs. From layout and routing efficiency to hybrid physical and virtual infrastructure, every aspect affects how effectively data flows within a data center. Careful evaluation of these elements is therefore crucial for optimizing performance and ensuring future scalability as technological demands evolve.

Security Implications

Bandwidth selection plays a critical role in shaping the security measures a data center can implement. As organizations rely more on rapid data transfer and real-time processing, security tools must keep pace with the traffic they protect. Intrusion detection systems (IDS) and encryption gateways, for instance, must be sized for the bandwidth of the links they inspect or protect. If a link carries more traffic than these systems can process, they either become bottlenecks themselves or must sample or bypass traffic, leaving gaps where threats can go undetected. Conversely, tightly constrained links leave little headroom for the overhead that encryption and security monitoring add, which can push operators to scale those protections back.

Assessing the risks associated with each bandwidth option is essential for maintaining a secure environment. High-bandwidth connections carry large-scale data transfers that can attract attackers. Distributed Denial-of-Service (DDoS) attacks, for example, overwhelm network resources by flooding them with excessive traffic. Because volumetric attacks can exceed the capacity of any single link, adding bandwidth alone does not protect a data center; it must be paired with DDoS mitigation such as upstream traffic scrubbing and rate limiting. Understanding the security implications of each bandwidth option allows IT professionals to close potential vulnerabilities while reserving adequate resources for protective measures.
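
Rate limiting, one building block of DDoS mitigation, is commonly implemented as a token bucket. The sketch below is a hypothetical per-client limiter, not a complete mitigation: traffic that saturates the upstream link has to be absorbed before it reaches the data center. Timestamps are passed in explicitly so the behavior is deterministic; in practice they would come from a monotonic clock such as `time.monotonic()`.

```python
class TokenBucket:
    """Token-bucket limiter: sustained `rate` requests/s, bursts up to `burst`."""

    def __init__(self, rate: float, burst: float) -> None:
        self.rate = rate
        self.burst = burst
        self.tokens = burst
        self.last = None  # timestamp of the previous check, if any

    def allow(self, now: float, cost: float = 1.0) -> bool:
        if self.last is not None:
            # Refill in proportion to elapsed time, capped at the burst size.
            self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


bucket = TokenBucket(rate=10, burst=5)
print(sum(bucket.allow(0.0) for _ in range(10)))  # 5: the burst passes, the rest is dropped
print(bucket.allow(1.0))                          # True: refilled after 1 s
```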

Compliance requirements also influence suitable bandwidth for data center operations. Industry standards such as HIPAA for healthcare and PCI DSS for payment card transactions impose strict requirements on how data is protected, including in transit. They rarely specify bandwidth directly, but the controls they require, such as encryption, audit logging, and backups, add traffic and processing overhead that capacity planning must account for. Failure to comply jeopardizes an organization’s reputation and invites financial penalties and legal consequences. Balancing bandwidth needs against compliance obligations is therefore integral to avoiding service disruptions while upholding regulatory mandates.

Taken together, the interplay between bandwidth decisions and cybersecurity calls for comprehensive assessment during data center planning. By considering how their choices affect existing security protocols, which vulnerabilities those choices expose, and which compliance requirements apply, organizations can build robust frameworks that safeguard critical assets while keeping their network infrastructure efficient.

Future Trends in Bandwidth Management

As technology continues to evolve, the demands for bandwidth in data centers are being reshaped by emerging technologies such as the Internet of Things (IoT) and cloud computing. The proliferation of IoT devices generates vast amounts of data that need real-time processing and transmission. For instance, a smart city initiative could involve thousands of connected sensors collecting data on traffic patterns, air quality, and energy usage simultaneously. This scenario necessitates robust connectivity and substantial bandwidth capacity to manage increased traffic effectively without diminishing service quality or network performance. Similarly, cloud computing offers opportunities for scalable resources that can adapt based on user demand but also requires significant bandwidth to facilitate seamless access to applications and data hosted off-site.
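
For a deployment like the smart city example, a back-of-the-envelope estimate multiplies device count, message rate, and message size. The sketch below includes an assumed overhead factor for protocol headers. The helper name, sensor count, reporting interval, and payload size are hypothetical.

```python
def iot_uplink_mbps(devices: int, messages_per_sec: float,
                    payload_bytes: int, overhead_factor: float = 1.2) -> float:
    """Aggregate average sensor traffic in Mbps.

    overhead_factor is an assumed multiplier for protocol headers
    (transport plus messaging protocol); measure it for a real deployment.
    """
    bits_per_sec = devices * messages_per_sec * payload_bytes * 8 * overhead_factor
    return bits_per_sec / 1_000_000


# Hypothetical deployment: 10,000 sensors, one 512-byte reading every 10 s.
print(round(iot_uplink_mbps(10_000, 0.1, 512), 2))  # 4.92
```

The average here is modest. The harder case is usually synchronized bursts, such as every sensor reconnecting and reporting at once after an outage, which is why the peak analysis discussed earlier still applies.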

Predictive analytics plays a critical role in anticipating future bandwidth needs. By leveraging historical usage data and trends, IT professionals can make informed decisions about their bandwidth requirements before bottlenecks occur. For example, a data center might analyze seasonal spikes in web traffic; if an e-commerce business typically experiences a surge during holiday seasons, predictive analytics can help preemptively scale up bandwidth to accommodate this influx without causing downtime or degraded performance. Moreover, implementing machine learning algorithms can optimize resource allocation dynamically by continuously analyzing real-time usage patterns and adjusting bandwidth accordingly.
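
The following is a deliberately simple stand-in for the predictive analytics described above, not a machine learning model. It fits a least-squares straight line to historical monthly peaks and reports when the forecast first crosses a utilization threshold. A linear fit ignores seasonality, so a holiday surge would need a seasonal model or a separate peak adjustment. The helper names, data, and 80% threshold are assumptions.

```python
from typing import Optional


def fit_trend(values: list[float]) -> tuple[float, float]:
    """Ordinary least-squares line (slope, intercept) through (month_index, value)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    xs = range(n)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def first_month_over(values: list[float], capacity: float,
                     threshold: float = 0.8, horizon: int = 60) -> Optional[int]:
    """First future month index whose forecast exceeds threshold * capacity."""
    slope, intercept = fit_trend(values)
    for month in range(len(values), len(values) + horizon):
        if slope * month + intercept > threshold * capacity:
            return month
    return None


peaks = [10, 11, 12, 13, 14, 15]  # hypothetical monthly peak Gbps
print(fit_trend(peaks))                      # (1.0, 10.0)
print(first_month_over(peaks, capacity=25))  # 11
```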

Adapting to evolving user demands is essential for staying competitive in a rapidly changing technological landscape. As remote work becomes increasingly common, businesses need flexible solutions that let users access applications from various locations with good performance. The continued rollout of 5G, which offers faster speeds and lower latency than earlier mobile networks, is also influencing how organizations design their networks. Data centers must remain agile and responsive to these external pressures as well as to internal shifts driven by organizational change, evolving consumer behavior, or new application deployments.

Looking ahead, understanding future trends in bandwidth management is vital for optimizing data center performance as technology advances. IoT and cloud adoption will continue to shape connectivity requirements, while predictive analytics enables proactive planning. Navigating these changes successfully requires ongoing assessment and adaptability from IT professionals as demands on bandwidth continue to grow.

Conclusion

Selecting the appropriate bandwidth for a data center deployment is a multifaceted decision shaped by several critical factors. Key considerations include:

- Assessing current and projected data traffic needs.

- Understanding the types of connectivity options available.

- Evaluating redundancy strategies for improved reliability.

- Conducting thorough cost analyses.

- Addressing security implications that shape bandwidth decisions.

- Accounting for how network architecture influences overall performance and capacity requirements.

As technology advances and user demands evolve, IT professionals and data center managers must routinely reassess their bandwidth selection strategies. By staying informed about emerging trends and adjusting plans accordingly, organizations can ensure optimal performance and future-proof against potential disruptions or inefficiencies in their network operations. An ongoing commitment to evaluation will empower these decision-makers to adapt swiftly to changes in technology infrastructure and user expectations while maintaining a robust and efficient data center environment.